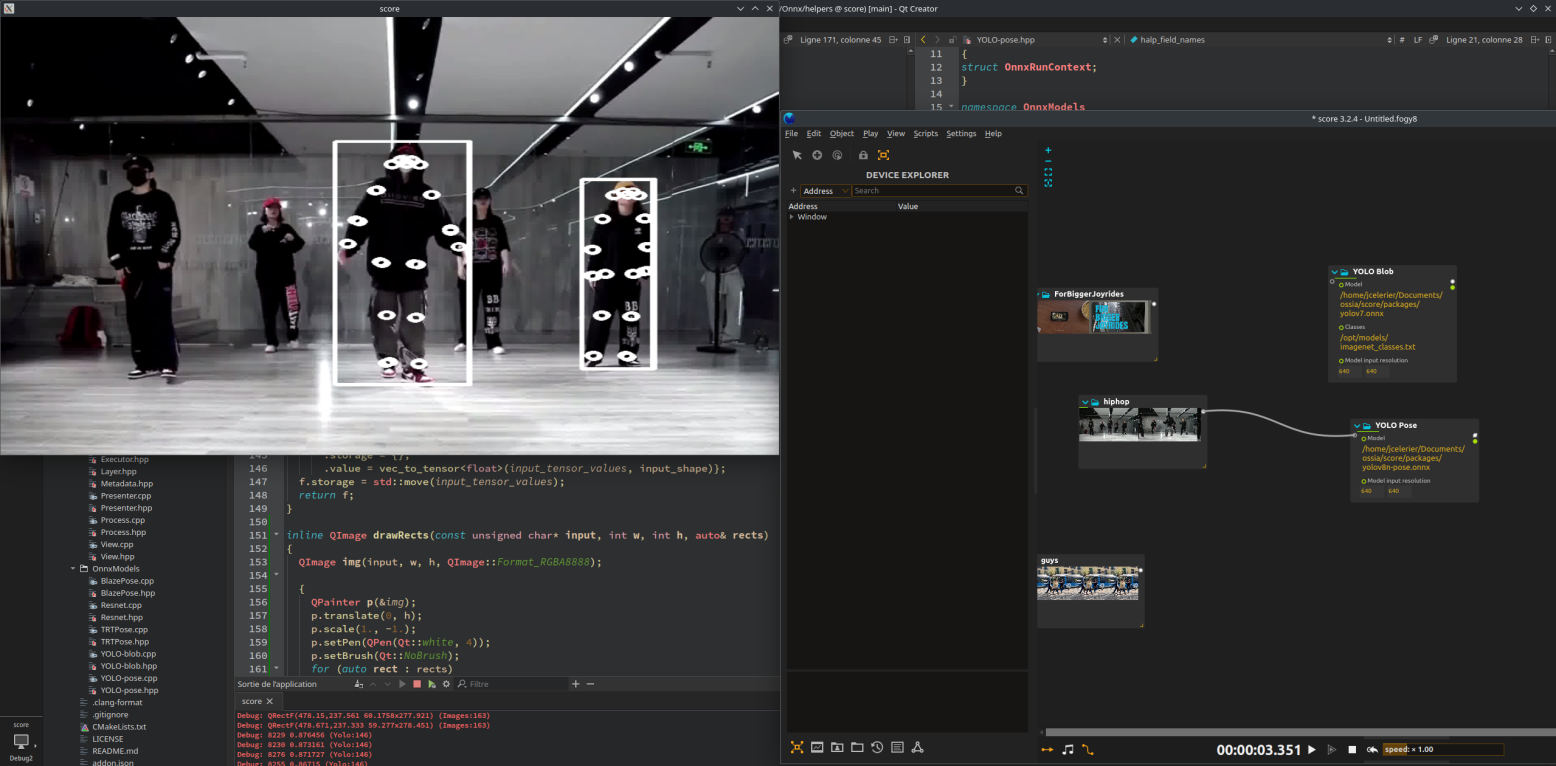

AI Recognition

How to run ONNX models in score

1. Drop an AI model process in your timeline

Let’s try the BlazePose Detector process. It reads from a camera or image input texture, runs the model, and outputs results in real time.

2. Download and load the model

3. Add a camera input device (or a video)

Choose a camera

Alternatively, drag and drop a video file into score and connect it to the AI model process.

4. Add a window device for output

Adjust the output window size to match your model’s output.

5. Play

Add a trigger if you want the process to loop continuously.

6. Extract keypoints

Each AI model outputs keypoints in a specific format.

For BlazePose, refer to the official documentation:

To extract keypoints such as the wrists or the nose, use the Object Filter process with the following expressions:

- Left wrist = `.keypoints[15].position[]`
- Right wrist = `.keypoints[16].position[]`
- Nose = `.keypoints[0].position[]`
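As a rough mental model of what these expressions select, here is a minimal Python sketch. It assumes the model result is a JSON-like object with a `keypoints` array whose entries each carry a `position` array of (x, y, z) values, which is what the filter paths suggest; `keypoint_position` and the index constants are hypothetical names for illustration, not part of score.

```python
from typing import Any

# Hypothetical constants mirroring the BlazePose indices used above.
NOSE = 0
LEFT_WRIST = 15
RIGHT_WRIST = 16


def keypoint_position(result: dict[str, Any], index: int) -> list[float]:
    """Rough equivalent of the filter `.keypoints[index].position[]`:
    pick one keypoint and return its position values (x, y, z)."""
    return list(result["keypoints"][index]["position"])


if __name__ == "__main__":
    # Fake result with 33 keypoints, assuming the structure described above.
    fake = {"keypoints": [{"position": [float(i), float(i) + 0.5, 0.0]}
                          for i in range(33)]}
    print(keypoint_position(fake, LEFT_WRIST))  # [15.0, 15.5, 0.0]
```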

For other models like RTMPose, here is the 26-keypoint layout:

7. Use keypoints

Once keypoints are extracted, you can connect them to any parameter in score for interactive control.
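When a keypoint coordinate drives a parameter, it usually has to be rescaled into that parameter's range. The sketch below shows a clamped linear mapping in Python, assuming (hypothetically) that coordinates arrive normalized to [0, 1]; check the range your model actually outputs. `rescale` is an illustrative helper, not a score API.

```python
def rescale(value: float, in_min: float, in_max: float,
            out_min: float, out_max: float) -> float:
    """Linearly map value from [in_min, in_max] to [out_min, out_max],
    clamping so out-of-frame keypoints don't overshoot the target range."""
    if in_max == in_min:
        raise ValueError("input range must not be empty")
    t = (value - in_min) / (in_max - in_min)
    t = min(max(t, 0.0), 1.0)  # clamp to [0, 1]
    return out_min + t * (out_max - out_min)


if __name__ == "__main__":
    # Hypothetical example: a wrist x position (assumed 0..1) driving a
    # filter cutoff between 200 Hz and 8000 Hz.
    print(rescale(0.25, 0.0, 1.0, 200.0, 8000.0))  # 2150.0
```

Clamping is a design choice here: without it, a keypoint detected slightly outside the assumed range would push the parameter past its limits.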

Example usage of keypoints

You can also send keypoints over OSC to external tools like Wekinator for gesture recognition or AI-based interaction.

- Use an Object Filter to extract the right wrist, left wrist and nose positions (x, y, z for each) with the following formula:

  `[ .keypoints[16].position[], .keypoints[15].position[], .keypoints[0].position[] ]`

- Combine them into a 9-value OSC message (3 keypoints × 3 coordinates) using the Array Combinor process.

- Send the OSC message to `wekinator:/wek/inputs`.
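To make the shape of that message concrete, here is a minimal Python sketch that encodes the nine values as an OSC 1.0 message with only the standard library. It assumes the values are sent as 32-bit floats in the same order as the formula (right wrist, left wrist, nose), and that Wekinator listens for inputs on UDP port 6448; that port is an assumption, so check your Wekinator setup. The helper names are hypothetical.

```python
import socket
import struct


def osc_string(text: str) -> bytes:
    """Encode an OSC string: ASCII bytes, a null terminator, then
    zero padding up to the next multiple of 4 bytes."""
    data = text.encode("ascii") + b"\x00"
    return data + b"\x00" * (-len(data) % 4)


def osc_message(address: str, values: list[float]) -> bytes:
    """Encode an OSC 1.0 message whose arguments are all 32-bit floats."""
    type_tags = "," + "f" * len(values)
    # OSC floats are big-endian IEEE 754 single precision.
    payload = b"".join(struct.pack(">f", v) for v in values)
    return osc_string(address) + osc_string(type_tags) + payload


def wekinator_packet(right_wrist: list[float], left_wrist: list[float],
                     nose: list[float]) -> bytes:
    """Flatten three (x, y, z) keypoints, in the formula's order,
    into one 9-float /wek/inputs message."""
    values = [*right_wrist, *left_wrist, *nose]
    if len(values) != 9:
        raise ValueError(f"expected 9 values, got {len(values)}")
    return osc_message("/wek/inputs", values)


def send_udp(packet: bytes, host: str = "127.0.0.1", port: int = 6448) -> None:
    """Send one OSC packet over UDP. Port 6448 is an assumed Wekinator
    input port; adjust it to your setup."""
    with socket.socket(socket.AF_INET, socket.SOCK_DGRAM) as sock:
        sock.sendto(packet, (host, port))
```

Inside score, the OSC device behind `wekinator:` handles this encoding for you; a sketch like this is mainly handy for testing a Wekinator project from outside score, e.g. `send_udp(wekinator_packet(rw, lw, nose))`.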

This can be used to trigger visuals, audio, shaders, and other cool things!