ossia score 3 beta

Introducing ossia score 3

ossia score is an interactive and intermedia sequencer: it lets you score multimedia content and processes in a timeline, such as sound, MIDI, OSC messages, video, VST plug-ins, and scripts, using a specifically tailored visual language. It has two defining features. First, the signal processing being applied can evolve over time: the first half of a score can apply a given set of effects to sound, video, etc., and the second half a completely different set. Second, the timeline can encode interactivity: it is possible to state that one part of the score will only execute when someone touches a control or does something on stage, while other parts are kept in step with the musical beat, and then to resynchronize these competing timelines afterwards.

Version 3 fulfills the long-term goal of intermedia score authoring of the ossia project, by allowing our timeline to combine state-of-the-art audio, control and video processing pipelines in a single integrated system.

Where version 2 focused on integrating audio processing into the execution engine, version 3’s defining features are video processing and playback support, which opens ossia score to the VJ world, and musical metrics support, which enables its use in works requiring tempo and beats.

The beta is freely downloadable. We aim for a final release in early autumn, once the bugs found during the beta testing cycle have been ironed out.

This post will present the new major features, and then sketch the way to version 4.

The road since score 2.0

This release cycle has been exceptionally long; thankfully, we are nearing the end:

- The last stable version, 2.5.2, was released on August 26, 2019, almost two years ago.

- The first 3.0.0 alpha was released on February 10, 2020.

So, what happened during these two years, totalling 1920 commits of work since version 2.5? Let’s take a look!

A metric ton of user interface work

A picture is worth a thousand words:

August 28, 2017 to August 17, 2021 😊 thanks to all the fine people involved ! @ossia_io #intermedia #ossia #ossiascore #musicsoftware #newmediaart #floss #opensource #freesoftware pic.x.com/ROQocR1ShD

— jm (@jcelerie) August 17, 2021

Many thanks in particular to Akané who spent a lot of time improving colours, sizes, positioning, etc. to make everything much more harmonious, pretty, and enjoyable to use.

Proper documentation

Julien and Thibaud contributed neat documentation for version 3, something that was sorely missing in prior versions.

In particular, a lot of techniques are described in the first two chapters, which act like a tutorial of sorts:

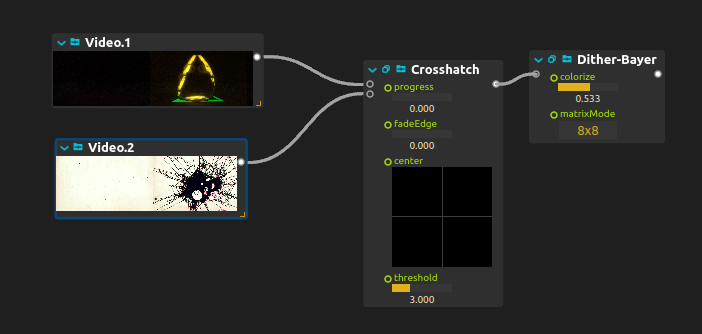

Node-based view

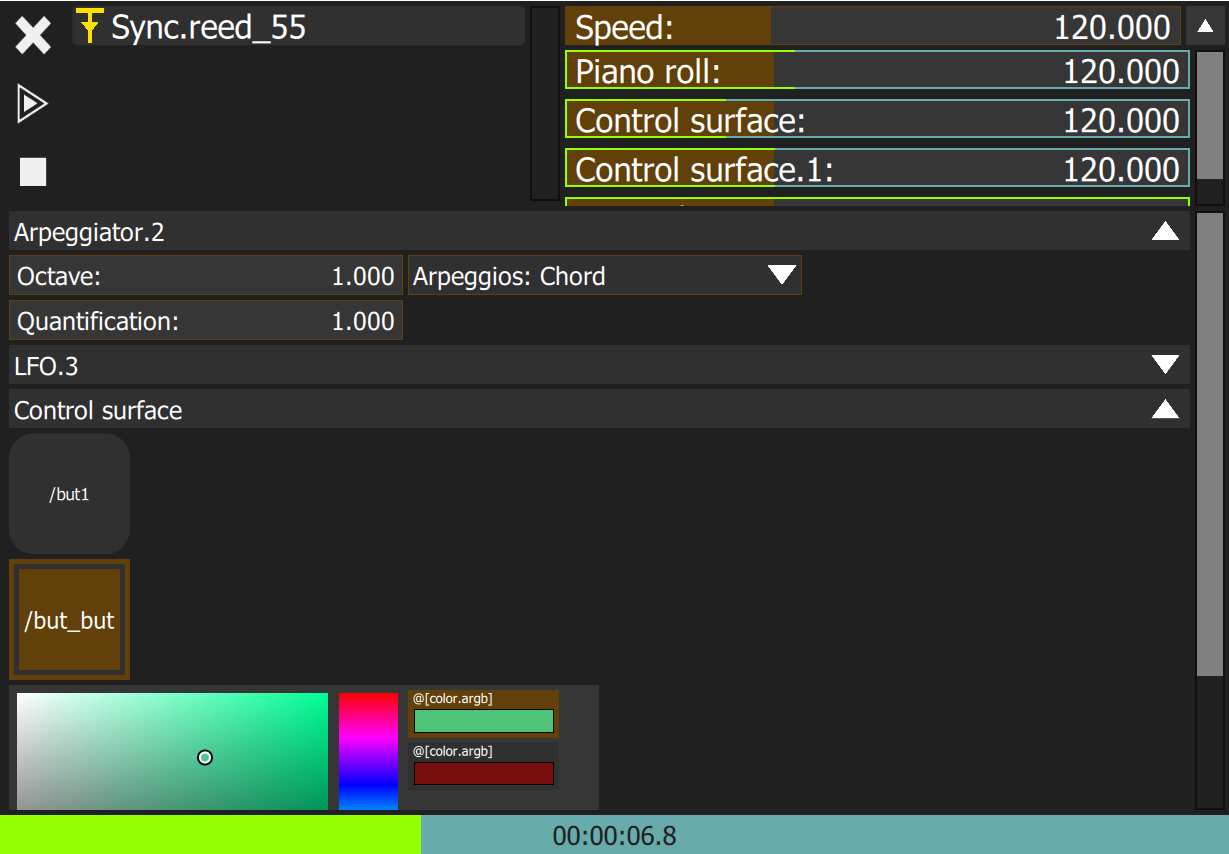

Intervals can now be viewed in nodal mode to easily interconnect their processes, with a switch in the transport bar. Purely non-temporal processes (VSTs, etc.) will always show up as a small nodal slot in intervals.

Video pipeline

We developed a complete GPU-based video processing pipeline, which makes it possible to generate, transform, filter, and modify visuals without affecting CPU usage.

- FFmpeg is used for decoding videos, which means that most formats should be supported. Additionally, the HAP codec is independently supported, to ensure that we get the most out of the file format and decode it entirely on GPU.

- We support applying shader-based filters on these videos and editing these shaders live, during the execution of a score. Interactive Shader Format, the well-known Vidvox technology, is used as the main shader system.

- The render graph is based on Qt RHI: it is possible to leverage Vulkan, OpenGL, Metal, or Direct3D for rendering. For version 3, only OpenGL will be officially supported, but it is easy to try the other APIs in the application settings.

- Standard camera input is supported; proper Spout and Syphon support is targeted for a 3.x release. NDI input and output are being developed in a separate add-on which will be shipped through the package manager.

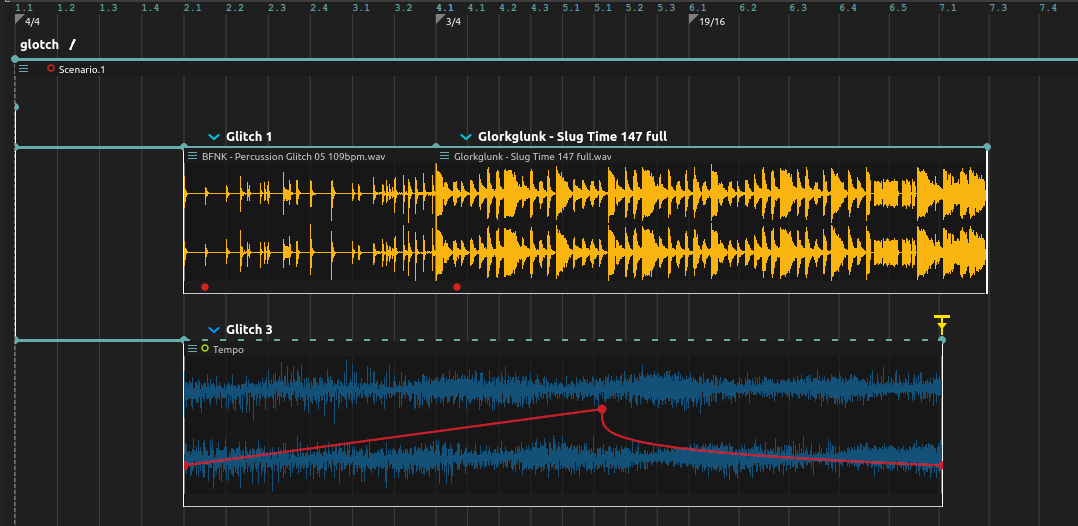

Musical metrics and quantization

Support for tempo, musical bars, and quantization has been introduced, as well as a snap-on-grid system.

- Time-stretching is supported through different algorithms (resampling with libsamplerate, pitch-preserving timestretch with RubberBand).

- It is now possible to quantize the firing of a trigger, for instance to the beginning of the following bar.

- Poly-rhythm and poly-tempo are supported: each interval can have its own tempo and metrics timeline, which applies recursively to its children. For instance, the parent scenario can be in 4/4 while a child scenario is in 3/4.

- It is also possible to play and stop any interval during execution in a quantized way, to enable live-looping workflows.
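
To make the quantization idea concrete, here is a minimal sketch of how "wait for the following bar" can be computed. It is not taken from the score codebase: the function names are hypothetical, and it assumes a constant tempo, a fixed number of beats per bar, and a parent and child that share the same time origin.

```python
import math


def next_bar_start(position_beats: float, beats_per_bar: int) -> int:
    """Beat index of the first bar line strictly after position_beats."""
    current_bar = math.floor(position_beats / beats_per_bar)
    return (current_bar + 1) * beats_per_bar


def beats_to_seconds(beats: float, tempo_bpm: float) -> float:
    """Convert a duration in beats to seconds at a constant tempo."""
    return beats * 60.0 / tempo_bpm


# Hypothetical situation: a trigger is requested at beat 5.5, tempo is 120 BPM.
position = 5.5
tempo = 120.0

# Parent scenario in 4/4: the trigger waits for the bar line at beat 8.
parent_target = next_bar_start(position, beats_per_bar=4)
# Child scenario in 3/4: the trigger waits for the bar line at beat 6.
child_target = next_bar_start(position, beats_per_bar=3)

parent_delay = beats_to_seconds(parent_target - position, tempo)
child_delay = beats_to_seconds(child_target - position, tempo)
```

With these assumptions, the same request fires 1.25 seconds later in the 4/4 parent and 0.25 seconds later in the 3/4 child, which is the kind of difference poly-metric scores have to account for.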

Presets

A preset mechanism has been introduced for all processes, to make it easy to save and restore a process’s state and to build up a great user library of presets.

If you make cool presets, you are more than welcome to contribute them to the official repository!

Graph intervals

This is the main breaking change in ossia score 3: in versions 1 and 2, looping was handled by a specific process. In version 3, we redid that in a much more general way, by introducing a new type of interval which can cycle back to any other part of the score.

This means that it is now possible to create state-machine-like scores, where one part plays after another and then goes back to the first one. This is particularly useful for installations, and more generally for arbitrary jumps and loops in a score. We look forward to seeing what you are going to create with it!
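
As an illustration only, a state-machine-like score can be pictured as a directed graph in which some edges point backwards. The sketch below is not the score data model: the part names and the `play_order` helper are hypothetical, and it always follows the first outgoing edge, whereas a real score would choose based on triggers and conditions.

```python
from typing import Dict, List

# Hypothetical score: each part lists the parts that may follow it.
score_graph: Dict[str, List[str]] = {
    "intro": ["part_a"],
    "part_a": ["part_b"],
    "part_b": ["part_a"],  # a graph interval cycling back to an earlier part
}


def play_order(graph: Dict[str, List[str]], start: str, steps: int) -> List[str]:
    """Follow the first outgoing edge of each part for at most `steps` transitions."""
    order = [start]
    current = start
    for _ in range(steps):
        successors = graph.get(current, [])
        if not successors:
            break  # no outgoing interval: the score ends here
        current = successors[0]
        order.append(current)
    return order


sequence = play_order(score_graph, "intro", 5)
```

Here `sequence` is `["intro", "part_a", "part_b", "part_a", "part_b", "part_a"]`: the intro plays once, then the two parts alternate for as long as the score runs.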

Package manager

In the settings, the “Package” tab lets you download add-ons and media packs.

Pure Data integration

Thanks to the libpd project, we are able to provide a fairly complete integration of Pure Data patches as score processes. Read more in the documentation!

C++ JIT support

JIT compilation support makes it possible to live-code C++ extensions directly from within the software, just like it was possible with the JavaScript process, but with greatly improved performance (and stronger real-time guarantees).

This will be an experimental feature for the 3.0 release cycle.

Along with that add-on comes the upcoming stable plug-in API, currently being worked on here. The end goal is to ship plug-ins in source form through the package manager: this will allow them to be compiled with knowledge of the capabilities of the user’s host CPU, giving peak performance by leveraging SSE, AVX, etc. whenever possible.

For instance, Maria Paula implemented the audio oracle and VMO improvisation algorithms in C++ as score plug-ins, which are going to be distributed through the package manager.

Théo Barbé has been working on a video mapping plug-in, and Arthur Fourcadet on a GPU particle system; both will also be released as add-ons.

Remote control

The remote control API, introduced at the end of 2.5.2, has been leveraged by a team of students to create a neat app for tablets, which makes it very easy to control parts of the score from a mobile device. Our intern Antoine has been improving it over the summer in order to make it usable from web browsers directly. More on this in a future blog post!

Smaller improvements

- We added a built-in looping feature for individual processes, to easily loop audio files by simply extending them, like in most audio sequencers.

- Many small and useful processes have been introduced; go discover them in the docs!

- Intervals can act as mixing busses, available in a panel at the bottom.

- Integration of the OLA DMX fixture library, to make it easier to automate lighting hardware.

- Official support for running score on Raspberry Pi 3 and 4, with an official release build. This build is able to use EGLFS to show video content directly in the most performant way for art installations.

Roadmap to v4

The main work for v4 will concentrate on a few major things: a stable plug-in API, MIDI 2.0, user interface improvements for authoring and scripting, and recording. In addition, as usual, a lot of small-ish new features and improvements may make their way into the software :-)

In particular, most of the project management has moved to GitHub Projects: the tool is far from perfect, but it makes it very easy to link project steps to issues and centralizes things efficiently.

Proper Unreal Engine integration

The work done since 2019 has been achieved thanks to a generous MegaGrant from Epic, allowing Jean-Michaël to work full-time on improving score in order to provide an integration with Unreal Engine.

Part of that work went into a prototype of libossia integration in UE. It did not work, and required a refactor of all the network internals of libossia: from a threaded model, where every protocol lived its life freely in one or multiple threads, to a much simpler event-based model leveraging Boost.Asio, which will integrate more easily into the Unreal event loop.

This refactor is pretty much finished and will now enable work on a library version of score, which will then be embeddable in UE and other creative coding environments. What remains to be specified technically is the efficient passing of sound and video data to other environments, especially when GPU processing is involved.

Stable plug-in API

The v3 to v4 release cycle will be about stabilizing things: we aim to finish the work on the execution engine to make sure that it is possible to write score plug-ins without any compromise in performance, in order to create a lasting and vibrant ecosystem of extensions to score.

As such, a lot of work is going into defining the ideal plug-in API; the previous blog post hints at the path we are taking for that API.

Support for MIDI 2.0

Multiple ossia.io members are part of the Intermedia Mapping and Scripting MIDI Special Interest Group. As part of the SIG, our mission is to find ways to make sure that MIDI 2.0 is a first-class protocol for all intermedia needs. The main way to do this will be to first try to implement MIDI 2.0 in libossia and score, then see what is missing or problematic in order to give recommendations to the MIDI SIG.

Timeline UX improvements

This concerns all the improvements to the central score view, in order to make it as easy as possible to author temporal content.

Things being evaluated for version 4 include:

- Better support for sequences of automations and controls on the same parameters.

- Track-like processes for sound and MIDI data, to allow applying effects more easily to a sequence of sounds.

- Support for saving and reloading presets of processes as part of the timeline.

- Parametric processes and variables: how to have a “configurable” process preset. That would enable for instance having a scenario preset for controlling a parameter with multiple automations and states, and being able to change the address being controlled by that preset in a single place in the user interface.

- Hierarchical data support for types other than sound: for instance, automatically mixing video content in the parent like it is being done for sound right now.

The actual ideas are being put on our project board; everyone is free to participate by requesting features on the forum or on the chat :-)

Proper recording mechanism

Right now we only support a mechanism for recording data-like addresses from the device explorer to the score: OSC messages, MIDI controls, etc.

The goal for version 4 is to work on a unified system for recording all kinds of media: sound, MIDI notes, video. This needs a better implementation than what we have now - the current recording facilities were implemented back in 2015 for the sake of a deadline and have not been touched since. In particular, they are not integrated at all with the execution data flow.

Longer term roadmap

The long-term vision for ossia score has one definite use-case: it should ultimately be possible to:

- Open a web browser to our web version.

- Join a collaborative scoring session, edit sound, video, etc.

- Press “play”, and have the score’s execution on every browser synchronize the way it was specified by the composers: for instance, a first interval plays on user 1’s browser, and a second interval plays on user 2’s browser; user 1 receives the sound that is generated by that interval, processes it, and sends it back to user 2.

- Press “export”, and get a bundled WebAssembly player, which contains a compiled and optimized version of the score, for instance either as a native Windows / Mac / Linux executable, or as a WASM bundle that can then be embedded in another web page.

We are roughly 15% of the way towards that vision, which requires a lot of different parts working together. Most importantly, the transfer of data and media assets between machines should be as efficient as possible, and has not been tackled at all so far.

WebAssembly port

Right now, one can make very simple scores in our web prototype, but this is not usable in any production setting. We would like to be able to export scores using sound, video, Faust plug-ins, etc. as a WASM object which could then be embedded in a web page.

Collaborative editing and distributed execution

These capabilities have been prototyped but not kept up to date with the rest of the software. Recent contract work helped make the codebase compile again; the synchronisation issues between computers remain to be fixed.

Contributing to ossia score

ossia is a free software project at its core, and we hold free software values dearly.

As such, we are open to contributions from everyone interested in improving it: if you want to start contributing to score and don’t really know where to look, come to the chat and we will do our best to mentor you!

Thanks for reading, and have fun with the beta!